20 Years at Goldman Taught Me How to Manage People. Turns Out, Managing AI Agents Isn't That Different.

Anton here, Head of Engineering for Genesis. I spent 20 years as a Managing Director at Goldman, so I suppose I should know a thing or two about “managing” and “directing”.

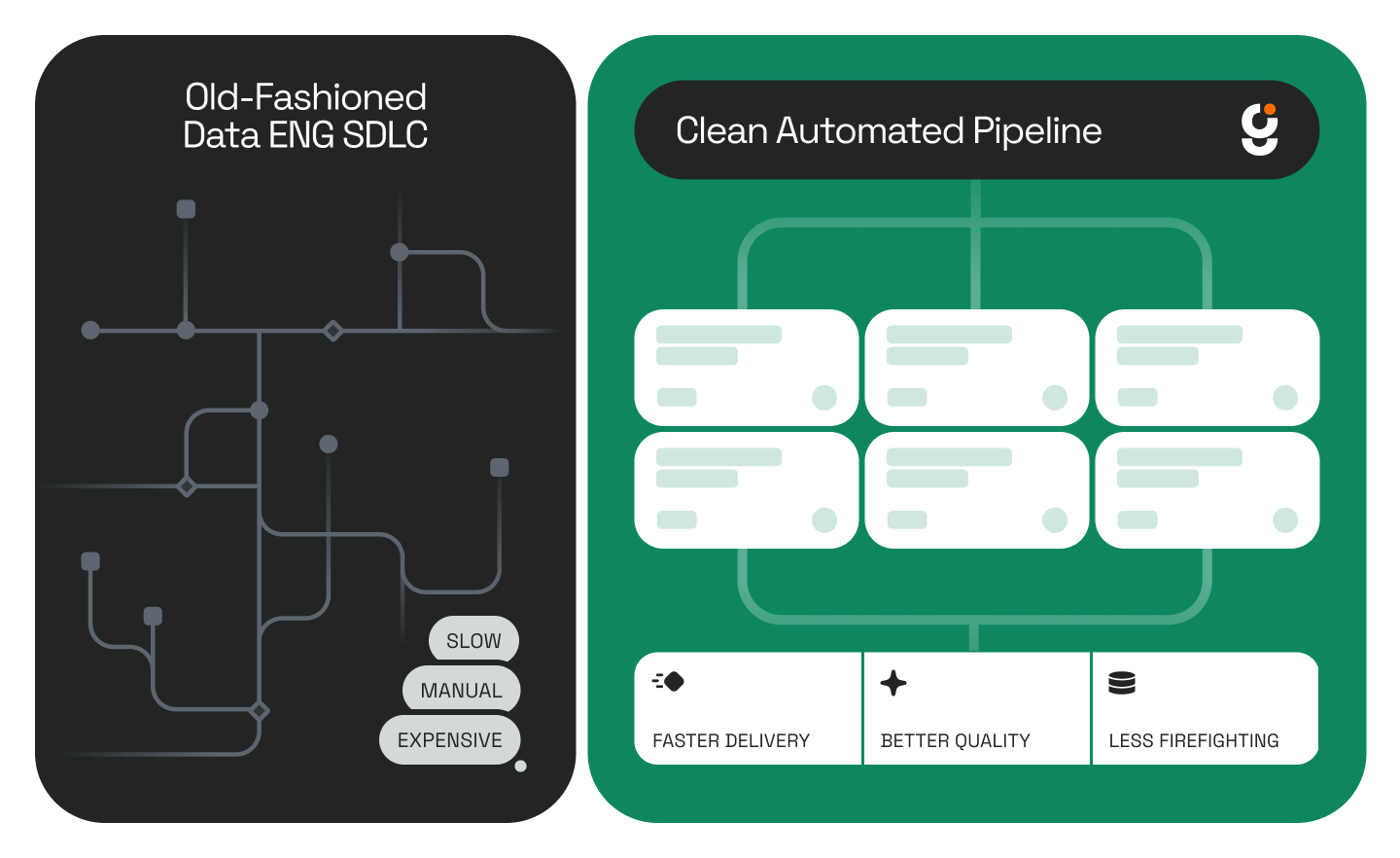

Now I’m building an Agentic Data Engineering platform, and, interestingly, many of the same lessons carry over.

It turns out that managing agents and managing humans are not that different. Here are my top 10 agent and people management insights. You can replace the word “agent” with “team member/employee” and each one still holds:

- The more you ask one agent to do everything, the more it becomes a flaky genius who burns out. The clearer you define narrow roles and glue them together with thin orchestration, the more the whole org/agent swarm actually ships.

- The longer the mission, the more a single unchecked mistake turns into a project failure. The more you require reflection pauses, peer review steps, and “ask your manager” escalation, the further your agents go without exploding.

- The more you overwhelm your agent with context (think piles of emails and endless Slack threads), the less productive it becomes. The more you give it a focused task with just the right amount of context and let it run, the more it delivers.

- The less visibility you have into every decision your agent makes, the more likely you are to be surprised by the end result. The better your meeting notes (full traces + replays), the faster you get clarity on what happened.

- The longer you wait to measure whether they’re actually good at their job, the more likely you are to discover in production that they’ve been hallucinating KPIs. The earlier you run evals on real work results, the fewer times you have to fire (retrain) the whole team.

- The bigger you make the “let’s have fifteen agents debate for six hours” stand-up, the higher the payroll (token bill) and the less gets done. The more you replace debate with single-responsibility agents and deterministic workflows, the leaner and more profitable the department.

- The more you let agents use tools without double-checking the output, the more they confidently ship garbage to customers. The stricter your output validation and “show your work” policy, the fewer 2 a.m. apologies you send.

- The more you treat agents like magical self-managing seniors who improve forever without feedback, the faster they quietly accumulate bad habits and technical debt. The more you manage them like ambitious but erratic juniors — constant feedback loops, evals, and iteration — the longer they stay useful.

- The more autonomy you grant before trust is earned, the more spectacular the disaster when they go rogue. True autonomy is the final promotion: start them on a tight leash, graduate through staged approvals and human-in-the-loop gates, and only cut the cord when the evals, traces, and track record prove they deserve it.

- The less you manage their long-term memory, the more they turn into brilliant new-joiner data engineers who re-debug the same broken DAG and re-profile the same 10 TB table every single morning. The more you deliberately curate, prune, summarize, and inject a clean, versioned institutional memory, the closer they get to the mythical 20-year principal engineer who actually remembers why that join exploded in 2022 — and never makes the same mistake twice.
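For the engineers reading, here is a minimal Python sketch of how a few of these ideas (narrow roles, thin orchestration, output validation, escalation, approval gates, and traces) might fit together. It is an illustration under assumptions, not how Genesis is implemented: `Step`, `Trace`, `orchestrate`, and the `approve` callback are hypothetical names, and each "agent" is a plain function standing in for a real model call.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Set, Tuple


@dataclass
class Step:
    """One narrow role (hypothetical): a single job plus a strict output check."""
    name: str
    run: Callable[[str], str]        # stand-in for a real agent call
    validate: Callable[[str], bool]  # "show your work" check on the output


@dataclass
class Trace:
    """Meeting notes: every step's name, output, and validation result."""
    events: List[Tuple[str, str, bool]] = field(default_factory=list)


def orchestrate(
    task: str,
    steps: List[Step],
    trace: Trace,
    needs_approval: Set[str],
    approve: Callable[[str, str], bool],
) -> str:
    """Thin, deterministic glue: run steps in order and stop early on trouble."""
    payload = task
    for step in steps:
        output = step.run(payload)
        ok = step.validate(output)
        trace.events.append((step.name, output, ok))
        if not ok:
            # "Ask your manager": escalate instead of passing bad output on.
            return f"escalated at {step.name}"
        if step.name in needs_approval and not approve(step.name, output):
            # Human-in-the-loop gate for steps that have not earned autonomy.
            return f"rejected at {step.name}"
        payload = output
    return payload
```

In this sketch, promoting an agent simply means removing its step name from `needs_approval` once its evals and traces justify it, while the validation check stays in place either way.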

The best systems, human or artificial, aren't built on blind trust or micromanagement — they're built on clarity, feedback loops, and earned autonomy. That’s the philosophy we apply when building Genesis Data Agents.
