Context Management: The Hardest Problem in Long-Running Agents

If you’ve ever built an agent that runs beyond a few simple steps, you’ve seen what happens when it starts to lose the thread. It repeats itself, makes contradictory decisions, or begins solving problems it already closed earlier in the workflow. That isn’t the model being creative or unpredictable — it’s the model running out of working memory.

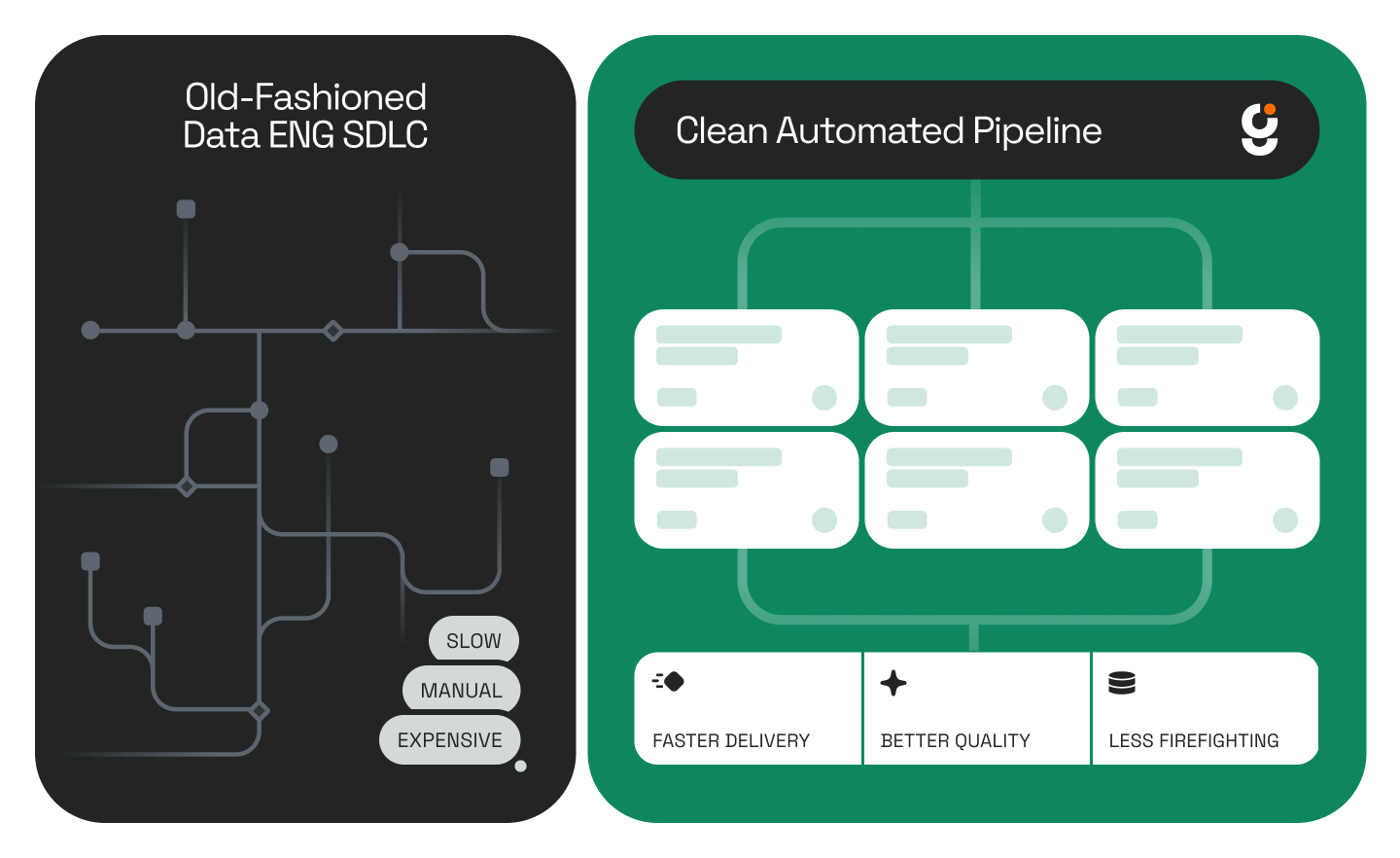

In Part 1 — Blueprints, I wrote about how we give agents a method to follow, a sequence of reasoning steps that mirror how real engineers work. Blueprints solve the process problem. But once an agent starts executing those blueprints for hours at a time — building pipelines, transforming data, or mapping fields — it hits the next bottleneck: memory.

Every modern LLM, no matter how large, still has a sharply limited working window — roughly 200,000 tokens on average. That might sound like a lot, but it vanishes fast when every step involves long SQL traces, tool outputs, or multi-line logs. Once that memory fills up, the model starts to blur together what’s current and what’s old. It begins to improvise, not because it’s wrong, but because we’ve overloaded it with context it can no longer distinguish. Most of what engineers call “hallucination” in long agent runs is really just this: context overflow disguised as reasoning failure.

Each agent call is an isolated event. The model itself doesn’t remember what happened before; it only knows what we include in that single payload. So the challenge isn’t about making the model smarter — it’s about deciding what portion of the project’s history to reload for each step so it has just enough information to continue correctly. We call this balance the Goldilocks context—the minimal set of facts the agent needs to do its job, no more and no less.

.png)

At Genesis, every agent call starts with a reconstructed context built dynamically from the blueprint’s current state. If the next task is to validate a Snowpark function, the agent doesn’t replay the entire project history. Instead, it receives the function code, the relevant inputs and expected outputs, a compact summary of recent activity, and links to any supporting Markdown notes or Git files. This way, the model’s working memory is clean, targeted, and immediately relevant — just as a human engineer keeps only a few key ideas in mind when focusing on one piece of code.

When a blueprint runs for hundreds of steps, carrying every prior message forward becomes impossible. Rather than feeding the full conversation back into the model, we compress it into structured summaries and persist the full raw data in Markdown. Those summaries capture the essential decisions, the state of variables, and any unresolved issues.

Later, when the agent needs to revisit something it did earlier, it doesn’t rely on recall from memory. It simply reopens its own notes, just as an engineer would scroll through an old notebook before resuming a project. That simple act — documenting as it goes — turns the agent’s workflow into something modular, recoverable, and inspectable by humans.

Not every step benefits from shared context. Some problems require isolation and a smaller mental space. When the agent reaches a deep, high-concentration task—such as writing a transformation or debugging a specific function — we spawn a subthread, a temporary working environment with only the essential tools and inputs. It’s the “locked room” concept: a quiet space where the agent can reason clearly without distractions from previous steps. Once the task is complete, the subthread merges its results and metadata back into the main thread, preserving continuity without carrying unnecessary weight.

Compression alone keeps the system efficient, but it doesn’t solve relevance. Agents must also know how to retrieve what matters. Each one maintains awareness of the files, tables, and summaries it has produced, and when a future step refers to them, it can re-load the specific resource from storage. This keeps working memory small but restores continuity at the exact point needed. The model doesn’t need to remember everything — it only needs to know where to look.

Every model handles memory differently. Some can sustain large windows but lose precision as they fill up; others reason more accurately within smaller slices. We continuously adjust compression and recall strategies for each model family to maintain a consistent quality of reasoning. Context management is therefore not a fixed algorithm — it’s an evolving discipline that changes with each generation of LLMs.

All of this happens behind the scenes. Engineers using the system don’t have to think about token budgets or prompt truncation. The platform handles it automatically, keeping the agent focused and coherent over long sessions. From the user’s point of view, the agent simply “remembers what matters.” From ours, it’s a disciplined orchestration of context refresh and recall happening continuously under the hood.

Blueprints define what an agent should do. Context management ensures it can keep doing it long after most systems would have lost coherence. When you get context wrong, the blueprint collapses after a few steps. When you get it right, the agent can run for hours, build complete pipelines, and stay logically consistent from start to finish. That’s the real engineering challenge in agentic systems today — not generating text, but sustaining thought.

.png)

.png)

.png)