Agent Server [3/3]: Agent Access Control Explained: RBAC, Caller Limits, and Safer A2A

The next question, then: the agents now have access to the underlying systems. That's great. They can use that access for their own recurring activities. Maybe every five minutes they check Airflow to see whether the jobs are running okay. So some of what agents do is scheduled or autonomous and doesn't involve a user talking to the agent in real time. Other activities do involve a user interacting with the agent, and that user could be a human or another agent.

The A2A Protocol

Agents have a protocol for this called A2A, agent-to-agent. It's starting to come into play as agents take over parts of what companies do. Agents need to talk to each other, and A2A is a protocol for that.

Role-Based Access Control for Agent Interactions

Regardless of whether it's a human user or another agent trying to interact with one of the data agents, there needs to be another set of rights and roles around that interaction. Say a human from group A tries to talk to an agent. That could happen through a variety of channels: through Slack, through Teams, by assigning the agent a ticket in Jira, or by tagging the agent in a Google document. There are lots of different paths by which a human or non-human could try to interact with an agent.

The agent needs some kind of role-based access control around which humans, groups of humans, other agents, or groups of other agents it's allowed to interact with at all. Then, within that, the control needs to cover what kinds of things that caller should be able to tell the agent to do, and what kinds of things the agent should be able to say back to that human or non-human.
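The three questions above (may this caller talk to the agent at all, what may it ask for, and what may the agent say back) can be sketched as a small policy object. This is only an illustrative sketch: the class names, group names, action names, and topic names are all hypothetical and are not taken from any particular product or from the A2A protocol.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Caller:
    caller_id: str
    kind: str                 # "human" or "agent" (hypothetical labels)
    groups: frozenset


@dataclass(frozen=True)
class Role:
    allowed_requests: frozenset   # things the caller may tell the agent to do
    allowed_responses: frozenset  # topics the agent may answer this caller about


@dataclass
class AgentPolicy:
    # Maps a group name to the role its members get with this agent.
    group_roles: dict = field(default_factory=dict)

    def _roles(self, caller):
        return [self.group_roles[g] for g in caller.groups if g in self.group_roles]

    def may_interact(self, caller):
        # A caller with no matching group may not talk to the agent at all.
        return bool(self._roles(caller))

    def may_request(self, caller, action):
        return any(action in r.allowed_requests for r in self._roles(caller))

    def may_receive(self, caller, topic):
        return any(topic in r.allowed_responses for r in self._roles(caller))


# Hypothetical policy: group A may read job status and restart jobs,
# a peer-agent group may only read job status.
policy = AgentPolicy(group_roles={
    "group-a": Role(frozenset({"check_jobs", "restart_job"}),
                    frozenset({"job_status", "job_logs"})),
    "peer-agents": Role(frozenset({"check_jobs"}), frozenset({"job_status"})),
})

alice = Caller("alice", "human", frozenset({"group-a"}))
reporter = Caller("report-agent", "agent", frozenset({"peer-agents"}))
stranger = Caller("mallory", "human", frozenset({"contractors"}))
```

Humans and agents go through the same checks here, which matches the point that the caller can be either. The same policy would apply whichever channel the request arrived on.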

Decoupling Agent and Caller Access

What that implies is a decoupling of the agent's access to underlying resources from the caller's access, the caller being the human or non-human talking to the agent. Say a human reaches out to an agent through Slack. That human might have access to all kinds of stuff in the enterprise, or to nothing at all. The agent has its own access to stuff. So there has to be a kind of mediation layer in the middle, where the agent is smart enough to decide whether the person telling it to do something is authorized to ask for that. Should the agent respond at all? If so, should the agent be able to do thing A versus thing B versus thing C? There's a whole variety of considerations that need to be taken into account.
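A minimal sketch of such a mediation layer follows, assuming a simple model in which the agent's own grants and the per-group caller limits are kept in two separate tables. The names `AGENT_GRANTS`, `CALLER_LIMITS`, and `mediate`, and the group and action names, are hypothetical. Note that the caller's own enterprise permissions are never consulted.

```python
from enum import Enum


class Decision(Enum):
    IGNORE = "ignore"   # the agent should not respond at all
    REFUSE = "refuse"   # the agent may respond, but will not do this
    ALLOW = "allow"     # the agent may carry out the action


# What the agent itself has been provisioned to do downstream (hypothetical).
AGENT_GRANTS = {"read_job_status", "restart_job"}

# What each caller group may ask this agent to do (hypothetical).
CALLER_LIMITS = {
    "data-eng": {"read_job_status", "restart_job", "drop_table"},
    "analysts": {"read_job_status"},
}


def mediate(caller_groups, action):
    """Decide how the agent should treat a request from a caller."""
    known_groups = [g for g in caller_groups if g in CALLER_LIMITS]
    if not known_groups:
        return Decision.IGNORE
    permitted = set()
    for group in known_groups:
        permitted |= CALLER_LIMITS[group]
    # Both sides must agree: the caller may ask for it AND the agent holds
    # that access itself. Neither side's access widens the other's.
    if action in permitted and action in AGENT_GRANTS:
        return Decision.ALLOW
    return Decision.REFUSE
```

In this sketch, a caller in `data-eng` asking for `drop_table` is refused even though the caller limit lists it, because the agent was never granted that access itself.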

Why OAuth Inheritance Is Not Appropriate

What is generally, in our opinion, not appropriate is to somehow inherit the calling user's access on the fly, have the agent step into the shoes of that user, and then use that user's token going downstream. Mechanisms like OAuth are set up, on the web and in other places, to do exactly that. But here we think that's not the best thing to do. Just because I talk to an agent, you don't want that agent to suddenly have all the capabilities and powers in my enterprise that I do. It might seem convenient, but it's not appropriate for a variety of reasons. You might not trust the agent to do the same things that you trust me, a human, to do.
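The difference can be sketched in a few lines: the downstream call is built with the agent's own credential, and the caller's identity travels along only as an audit label. This is an illustrative sketch under assumptions, not a description of any real OAuth library or of a specific product. `AGENT_CREDENTIAL`, `Request`, `DownstreamCall`, and `build_downstream_call` are hypothetical names, and the credential strings are placeholders.

```python
from dataclasses import dataclass

# Placeholder for a credential provisioned to the agent itself (hypothetical).
AGENT_CREDENTIAL = "agent-own-credential"


@dataclass(frozen=True)
class Request:
    caller_id: str
    caller_token: str   # whatever token the caller's channel presented
    action: str


@dataclass(frozen=True)
class DownstreamCall:
    credential: str     # what the downstream system authenticates
    action: str
    requested_by: str   # recorded for audit only, grants no access


def build_downstream_call(request):
    # The caller's token is deliberately never forwarded: the agent acts
    # with its own access, not with the powers of whoever is talking to it.
    return DownstreamCall(
        credential=AGENT_CREDENTIAL,
        action=request.action,
        requested_by=request.caller_id,
    )


req = Request(caller_id="alice", caller_token="alice-user-token",
              action="read_job_status")
call = build_downstream_call(req)
```

Whether the action should happen at all is a separate check made before this step. This sketch only shows which identity is used once it does.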

The Multi-User Context Problem

Then there's the question of what happens if I'm in Slack and I tell an agent to do something. Even in a private Slack DM with an agent, I could add another human, or another agent, to that conversation. Suddenly there's another party there who is potentially able to say things to the agent, or at least to see what the agent is telling me. The same goes for an agent in a Google doc, whether that doc has broad or narrow access, or an agent in a Slack channel, big or small. There are multiple people from the organization, and in Slack sometimes people from outside it, who can at least observe what that agent is doing with that human.

Agents as Independent Actors

So we think it's important for agents to have their own access, as if they were people. Multiple humans can interact in a Slack channel even though each of them has different access to underlying things in the organization. There's no immediate concern about those humans talking to each other in Slack or in Google Docs, because you know they are going to use their brains about whether or not they should be posting something there. The exact same thought pattern applies to interactions between agents and the outside world.

So role-based access control for agents, meaning which humans and other agents can talk to those agents and what they can do with them, is a very important topic. The industry is just starting to think about it, and it's not as obvious as people might initially imagine. It is definitely a bad idea for agents to just inherit the rights of whoever is talking to them. Some people are doing that. We think that's not good.

Provisioning Agent Access to Enterprise Resources

Then there's a whole other set of discussions, which I mentioned at the beginning, about how agents themselves are provisioned to have access to stuff in the enterprise. Now you're going in the other direction. How do you set them up? Are they humans? Do you set them up the same way you set up humans? Do you give them the same rights as humans? Do you set them up as service accounts? How do you control them? How do you monitor them? How do you provision them? In a lot of cases, if you set them up as if they're humans, you can monitor and control them the exact same way you would humans, which is generally easy for an organization because it already has systems in place to control and monitor what humans are doing.

Conclusion

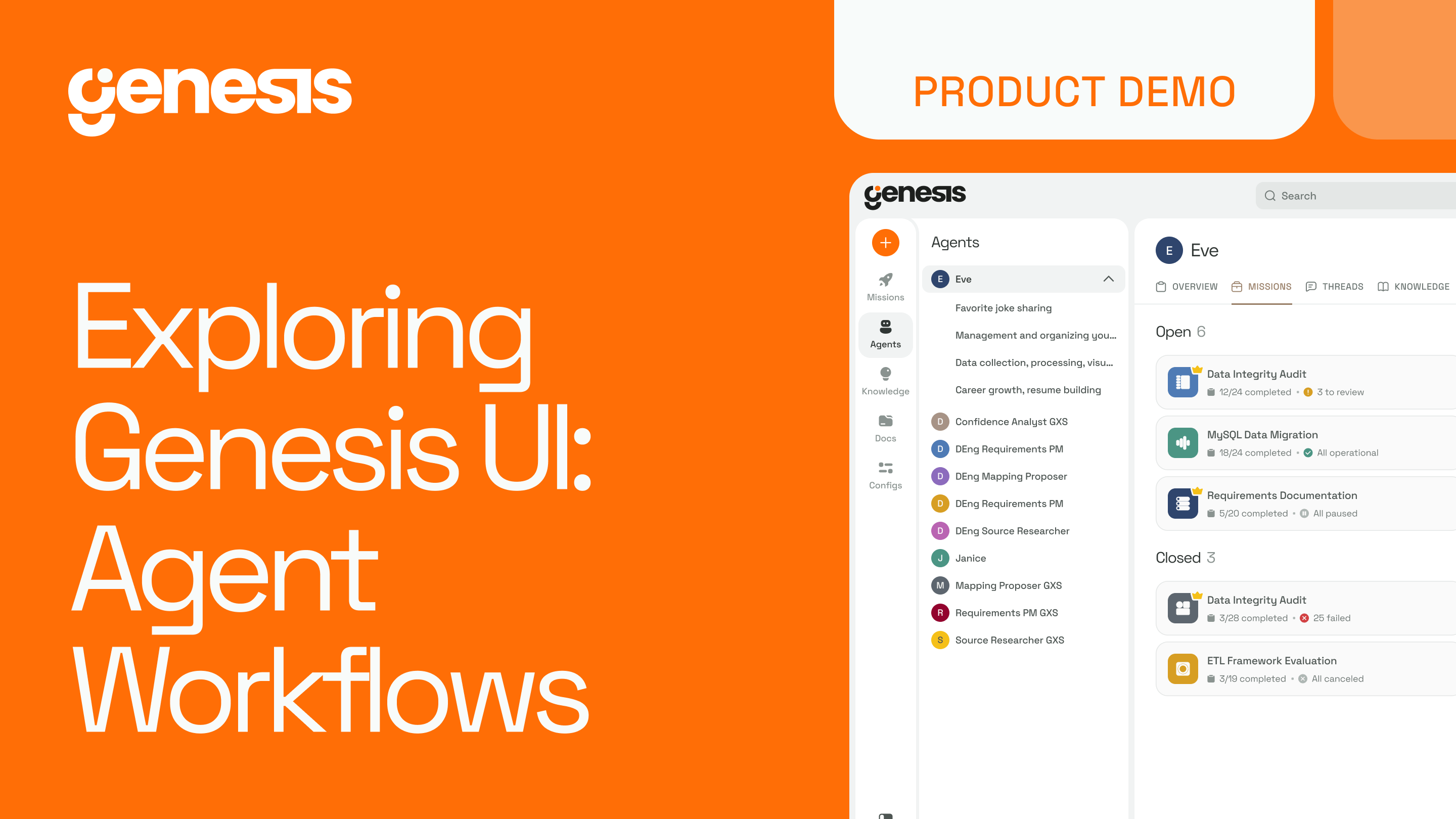

So that's the high-level view of agent access control in both directions: from the agent downstream to enterprise applications, and from humans, systems, and other agents into the agents themselves. That's a core part of what the Genesis agentic server is managing, monitoring, and controlling.